Looking for more clarity on the ever-developing field of Natural Language Processing? Good news! Avineon is launching a series of Natural Language Processing (NLP) blog posts to outline our success with Machine Learning and Deep Learning projects.

For your benefit, the topics covered are listed below:

- Data Rediscovered

- Learning Language

- What is Topic Modeling?

- Pay Attention! Comparing Text Utilizing Attention

- Speed Reading and the Value of Computer Language Processing

- Open to Possibilities. Is Machine Learning Right for Me?

These topics are geared towards those with little to no prior knowledge in the field but will aim to engage those of all knowledge levels. Whether you know only a few terms or whether you have built your own model, we look forward to embarking on this series with you!

The Speed You Read

Today’s entry is about the differences between human and machine reading. In previous posts, we discussed how machines conduct natural language processing. Now, we want to discuss why machines should be doing our reading.

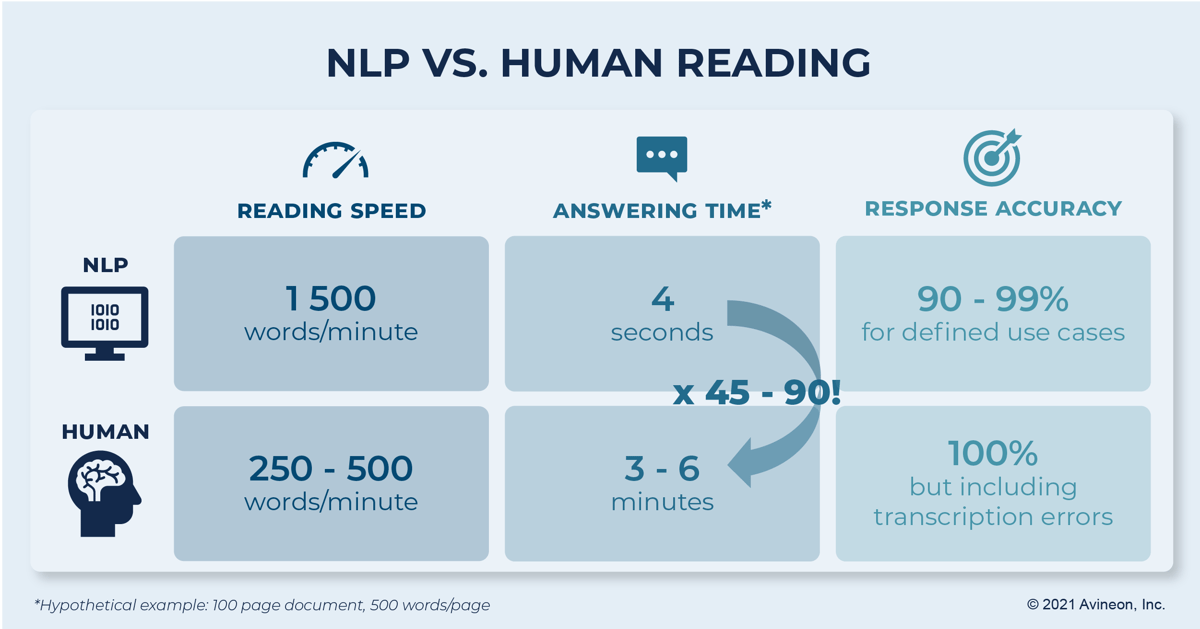

The metrics to consider are the time to read a document or text corpus and the accuracy when answering questions or completing a competency task based on the text.

Human Reading

We will do some quick napkin math to establish a baseline human reading speed and competency for a question answering task. On average, people read somewhere between 250 and 500 words per minute. These numbers vary from person to person, but the range gives us a frame to begin our calculation. If a page averages 500 words and a document runs 100 pages (50,000 words), then it takes about 100 minutes for a reader at 500 words per minute to complete the document and twice as long, about 200 minutes, for her colleague who reads at 250 words per minute.
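The napkin math above can be written out as a minimal sketch. The function name `reading_minutes` and the constant `WORDS_PER_PAGE` are our own illustrative choices, and the figures are the rough averages from the text, not measurements.

```python
WORDS_PER_PAGE = 500  # average page length assumed in the text


def reading_minutes(pages: int, words_per_minute: int) -> float:
    """Minutes needed to read `pages` pages at a steady pace."""
    return pages * WORDS_PER_PAGE / words_per_minute


# The two hypothetical readers from the text, reading the full 100 pages.
for wpm in (500, 250):
    print(f"{wpm} words per minute: {reading_minutes(100, wpm):.0f} minutes")
```

The same function covers shorter excerpts: pass a smaller page count to estimate the time for a skimmed section.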

The astute reader will immediately note that no person in their right mind would read the whole 100-page document to answer an individual question. You would skim the text or use a table of contents to get close to an answer and then read at most a few pages to find the response. Say you narrow it down to three pages (1,500 words). Neglecting the time it took to find those pages, you are looking at about three minutes to answer a question at 500 words per minute and about six minutes at 250 words per minute. We assume our hypothetical readers operate at 100% accuracy, although in practice comprehension errors, transcription errors, and misreadings of the text do occur.

Natural Language Processing

Now let us evaluate the speed at which NLP solutions operate. Processing 500 words of text and answering a question takes a model, on average, about two seconds, with the same accuracy as a human reader. Scaling up to our 100-page document (50,000 words) is where things get interesting. Without bogging down too much in the technical details, the speed of a given NLP solution depends on the architecture and the task. Across all NLP solutions, an increase in the amount of text to process will result in an increased time to evaluate.

What is less intuitive and more important is how the architecture of the solution factors into this equation. In some NLP techniques, the amount of computation grows with the square of the context size! Our 500-word text is really 250,000 numbers to compute, and our 50,000-word text is 2.5 billion numbers to compute at that step in the model. Therefore, layered solutions are incredibly important.
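To make the squared growth concrete, here is a small sketch. It assumes the simplest possible reading of the text: one number per pair of words, with each word counted as one unit. Real models work on tokens rather than words, so treat the counts as order-of-magnitude illustrations; `pairwise_numbers` is a name we made up for this example.

```python
def pairwise_numbers(num_words: int) -> int:
    """Numbers to compute when every word is compared with every other word."""
    return num_words ** 2


short_text = pairwise_numbers(500)      # one page
long_text = pairwise_numbers(50_000)    # the 100-page document

print(f"500 words:    {short_text:,} numbers")
print(f"50,000 words: {long_text:,} numbers")
print(f"100x more text costs {long_text // short_text:,}x more computation")
```

A hundredfold increase in text turns into a ten-thousandfold increase in work at that step, which is why shrinking the text before this step pays off so well.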

We can fix this problem by placing a topic modeling layer at the front of the system to sort the paragraphs, which reduces the text size dramatically. Now, the system checks for paragraphs similar to the question and then reads only the paragraphs it is most confident contain the answer. In effect, the system is skimming the text like our human reader. Using this technique, the topic modeler (which is an incredibly fast index of the paragraphs) takes two seconds to parse all paragraphs, and the question answering system takes another two seconds to read the selected text chunk. The combined NLP solution takes four seconds to complete the task.
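The layered idea can be sketched in a few lines. This is not our production pipeline: as a stand-in for a real topic model, the sketch below scores each paragraph by simple word overlap with the question, and the sample paragraphs, the question, and the function names are all hypothetical. Only the shortlisted paragraphs would then be passed on to the slower question answering model.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(question: str, paragraph: str) -> int:
    """Count paragraph words that also appear in the question.

    A crude stand-in for a topic model's similarity score.
    """
    question_words = set(tokenize(question))
    counts = Counter(tokenize(paragraph))
    return sum(counts[word] for word in question_words)


def select_paragraphs(question: str, paragraphs: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k paragraphs most similar to the question."""
    ranked = sorted(paragraphs, key=lambda p: overlap_score(question, p), reverse=True)
    return ranked[:top_k]


# Hypothetical document and question, for illustration only.
paragraphs = [
    "The warranty covers parts and labor for two years.",
    "Shipping usually takes five business days.",
    "Returns are accepted within thirty days of purchase.",
]
question = "How long does the warranty last?"

shortlist = select_paragraphs(question, paragraphs, top_k=1)
print(shortlist)  # only this text goes to the question answering model
```

Word overlap is noisy (common words such as "the" count as matches), which is one reason a real system would use a proper topic model or a similar index for this first layer.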

Comparing the two readers, our machine example operates about 45 times faster than our fastest human reader and 90 times faster than our slowest. The remaining question is how accurate the machine responses are. That, as always, depends on the task and the documents that need to be processed. Based on the newest research and testing, the responses will, on average, be somewhere between 90% and 99% accurate for specific questions that have an answer somewhere in the text. That range fluctuates with the types of documents and the difficulty of the questions. The NLP solution will always be better than humans in the transcription error category. This accuracy range continues to increase as new technology and techniques are researched, but this is not the end of our story.
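The 45x and 90x figures follow directly from the earlier numbers: a three-page skim at 500 words per page for the human, and two seconds each for the topic modeler and the question answering step for the machine. A quick check, with names of our own choosing:

```python
WORDS_PER_PAGE = 500
MACHINE_SECONDS = 2 + 2  # topic modeler + question answering, per the text


def human_seconds(pages: int, words_per_minute: int) -> float:
    """Seconds a human needs to read `pages` pages."""
    return pages * WORDS_PER_PAGE / words_per_minute * 60


for wpm in (500, 250):
    speedup = human_seconds(3, wpm) / MACHINE_SECONDS
    print(f"Reader at {wpm} words per minute: machine is {speedup:.0f}x faster")
```

These ratios still neglect the time the human spends finding the three pages, so the real gap is likely larger.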

A Semi-Automated Approach

If you want the best of both worlds, accuracy and speed, a middle ground does exist. A semi-automated approach lets human readers verify answers without having to find them and answer questions without having to understand them, and it facilitates updating databases without granting direct access to them.

While we will not see speeds of 45 to 90 times that of a human reader, we still eliminate the transcription errors, answer our questions to our task’s satisfaction, and streamline an otherwise unstructured process.