Looking for more clarity on the ever-developing field of natural language processing (NLP)? Good news! Avineon is launching a series of NLP blog posts to outline our successes with machine learning and deep learning.

The series covers the following topics:

- Data Rediscovered

- Learning Language

- What is Topic Modeling?

- Pay Attention! Comparing Text Utilizing Attention

- Speed Reading and the Value of Computer Language Processing

- Open to Possibilities. Is Machine Learning Right for Me?

These topics are geared toward readers with little to no prior knowledge of the field, but they aim to engage readers at every level. Whether you know only a few terms or have built your own model, we look forward to embarking on this series with you!

Comparing Text and Answering Questions

When you read a document and then answer questions about it, how do you know what the answers are? Do you look for keywords, compare ideas, or infer meaning? That process is at the core of every question-and-answer (Q&A) system. An answer only makes sense when both pieces of text are present: a document without a question gives the system nothing to answer, and a question without a document gives it nothing to answer from. So the two pieces of text must interact. Modeling that interaction is the key to any question-and-answer system, and it is part of Avineon’s natural language processing (NLP) capabilities.

The way one piece of text interacts with another is not limited to answering questions. The same idea can be used to sort documents or to categorize descriptions of work. Most problems that involve comparing two or more pieces of text can be modeled with a variation of an attention mechanism or a similar technology. “Attention mechanism” is a technical way of saying that one piece of text is “looking” at another piece of text and being compared against it.

The easiest way to describe attention is to think of how the pronoun “it” works in English. “It” refers to another word or phrase, either earlier in the same sentence or in a previous sentence, and carries that meaning with it. Now extend that idea to every word in the text, so that each word can carry meaning from the other words, and you have the general idea of attention.
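To make the “it” analogy concrete, here is a minimal sketch of one common form of attention (scaled dot-product attention) in plain Python. The word vectors are made up for illustration and are not taken from any real model; the point is only to show how a word like “it” can assign more weight to the word it most resembles.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Returns the attention weights and the weighted mix of the values.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    mixed = [sum(w * v[i] for w, v in zip(weights, values))
             for i in range(len(values[0]))]
    return weights, mixed

# Toy 2-dimensional word vectors, invented for this example only.
vectors = {
    "the":    [0.1, 0.0],
    "dog":    [2.0, 0.2],
    "barked": [0.2, 2.0],
    "it":     [1.5, 0.1],
}
earlier_words = ["the", "dog", "barked"]   # words that "it" can look back at
keys = [vectors[w] for w in earlier_words]

# "it" is the query; the earlier words serve as both keys and values.
weights, mixed = attend(vectors["it"], keys, keys)
for word, weight in zip(earlier_words, weights):
    print(f"{word:>7}: {weight:.2f}")
```

With these toy vectors, “dog” receives the largest weight, so the meaning carried by “it” is dominated by “dog”. A real system learns its vectors from data and applies this step to every word at once.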

Question-and-Answer System

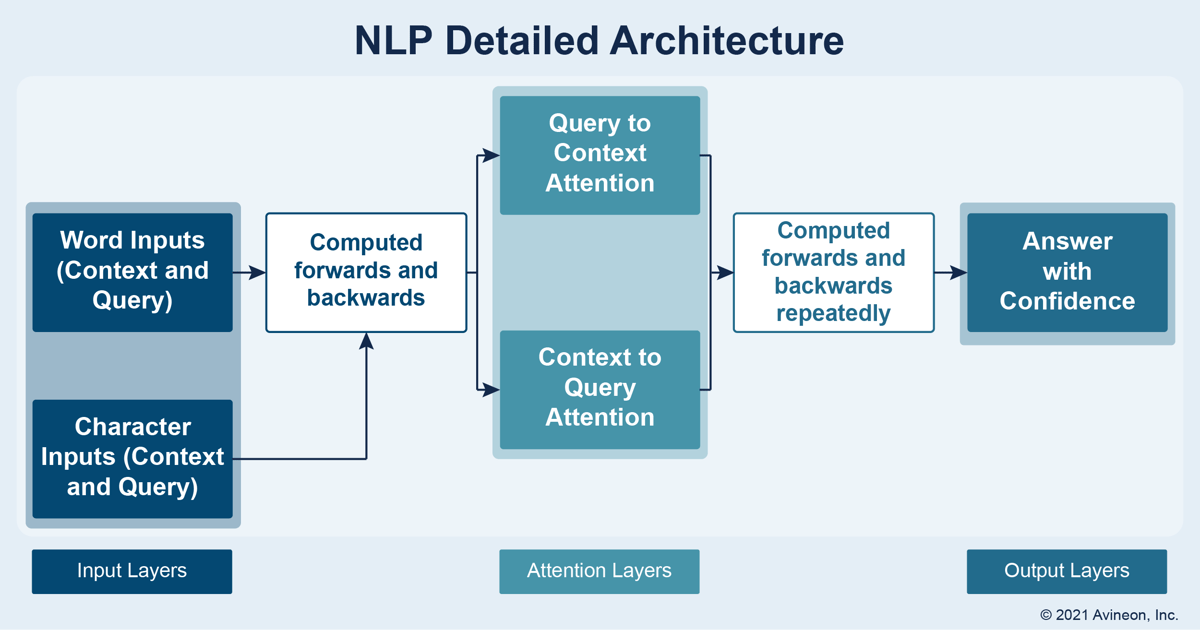

One of Avineon’s NLP capabilities, our question-and-answer system, uses attention along with other state-of-the-art NLP techniques and technologies to examine large text corpora and draw inferences and connections quickly and accurately. Given two pieces of text, whether a document and a question or two documents, the system can identify key relationships or answer the question using the text itself, and it reports a percentage confidence in its answer.

On a large scale, the system can compare text quickly and accurately, scanning entire documents forwards and backwards (often referred to as “bidirectional attention”). The illustration below outlines a question-and-answer solution: a document and a question go in, and the answer to the question, found within the document, comes out. If no answer meets the confidence threshold, the user is informed that the answer likely does not exist in the document.
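The confidence check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Avineon’s implementation: the function name `answer_or_none`, the candidate scores, and the 0.5 threshold are all hypothetical, and the confidence is simply a softmax over the candidate scores.

```python
import math
from typing import List, Optional, Tuple

def answer_or_none(
    candidates: List[Tuple[str, float]],
    threshold: float = 0.5,   # hypothetical cut-off, chosen for illustration
) -> Tuple[Optional[str], float]:
    """Return the best candidate answer, or None if confidence is too low.

    candidates: (answer text, raw score) pairs. A real system would
    produce these from the document and the question.
    """
    if not candidates:
        return None, 0.0
    # Softmax turns raw scores into confidences that sum to 1.
    peak = max(score for _, score in candidates)
    exps = [math.exp(score - peak) for _, score in candidates]
    total = sum(exps)
    confidences = [e / total for e in exps]
    best = max(range(len(candidates)), key=lambda i: confidences[i])
    if confidences[best] < threshold:
        return None, confidences[best]
    return candidates[best][0], confidences[best]

# One candidate clearly stands out, so it is returned as the answer.
clear = [("red", 4.0), ("steel", 1.0), ("in 1937", 0.5)]
answer, confidence = answer_or_none(clear)
print(answer, f"{confidence:.0%}")

# No candidate stands out, so the user is told there is likely no answer.
unclear = [("red", 1.0), ("steel", 1.1), ("in 1937", 0.9)]
answer_2, confidence_2 = answer_or_none(unclear)
if answer_2 is None:
    print("The answer likely does not exist in the document.")
```

Where the threshold sits is a design choice: a higher value means fewer wrong answers but more “no answer” responses.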

Natural Language Processing

As the outline above shows, several key pieces of NLP technology can be layered on top of each other to form more complete solutions. Each step of a layered solution can be tweaked, modulated, or even removed depending on the problem at hand. These nuances require specific consideration and are exactly what Avineon is keen to pay attention to.
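As a rough illustration of layering, the sketch below treats each step as a small function and a solution as an ordered list of steps. The three steps shown are simple text-cleaning stand-ins chosen for this example, not the components of Avineon’s system; the point is that a step can be removed or swapped without touching the others.

```python
from typing import Callable, List

Step = Callable[[str], str]

def lowercase(text: str) -> str:
    """Convert the text to lower case."""
    return text.lower()

def strip_punctuation(text: str) -> str:
    """Keep only letters, digits, and whitespace."""
    return "".join(ch for ch in text if ch.isalnum() or ch.isspace())

def collapse_whitespace(text: str) -> str:
    """Replace runs of whitespace with single spaces and trim the ends."""
    return " ".join(text.split())

def run_pipeline(text: str, steps: List[Step]) -> str:
    """Apply each step in order, feeding each output into the next step."""
    for step in steps:
        text = step(text)
    return text

sample = "  Pay Attention!   Comparing text...  "

# The full stack of layers.
full = [lowercase, strip_punctuation, collapse_whitespace]
full_result = run_pipeline(sample, full)
print(full_result)

# The same stack with one layer removed, for a problem where case matters.
case_sensitive = [strip_punctuation, collapse_whitespace]
case_result = run_pipeline(sample, case_sensitive)
print(case_result)
```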